Beyond the Prompt: A Guide to Architecting Real-World AI Agents

Anyone who’s worked with a modern Large Language Model (LLM) knows the feeling. You write a few clever sentences, and poof—it generates code, outlines a marketing plan, or explains quantum physics. It feels like magic.

But that initial spark of magic is just the beginning.

An autonomous agent that your business can truly rely on—one that makes decisions, interacts with live systems, and delivers predictable value—isn’t born from a single prompt. It’s the result of deliberate engineering and a thoughtful blueprint. It’s the difference between a cool demo and a trusted digital employee.

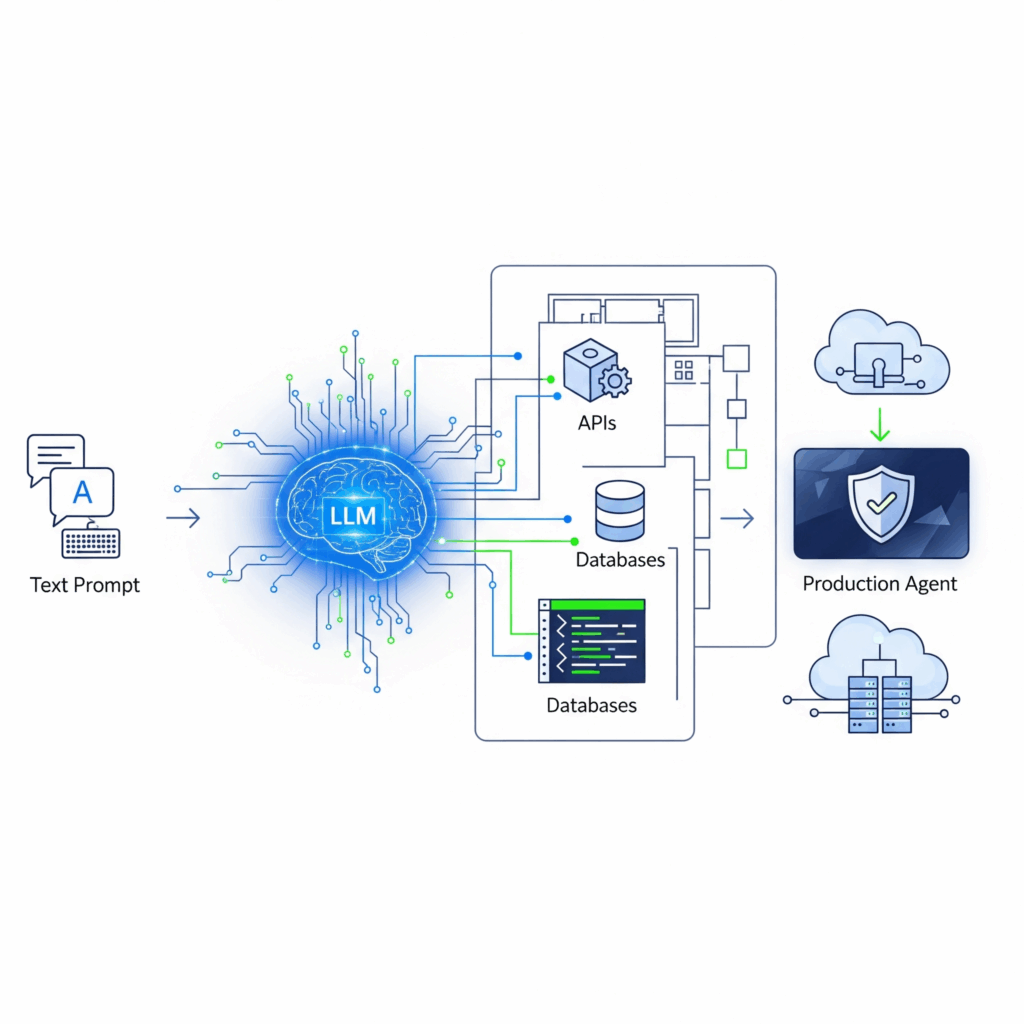

So, how do we bridge that gap? Here is a four-part architecture for building an agent that’s ready for the real world.

1. The Spark (The Core Brain)

Every agent starts with an LLM at its core. This is the “brain” of the operation. This first step involves choosing the right model (a constant trade-off between power, speed, and cost) and mastering prompt engineering to define the agent’s core reasoning skills and personality.

But at this stage, it’s just a brain in a jar—incredibly smart, but with no way to interact with the world.
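To make that “constant trade-off” concrete, here is a minimal sketch. It assumes you have already measured each candidate model on your own evaluation set. The idea is to pick the cheapest model that clears both a quality bar and a latency budget. The model names, scores, and prices are hypothetical placeholders, not real benchmarks. `ModelOption`, `choose_model`, and `SYSTEM_PROMPT` are illustrative names and are not part of any provider’s API.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class ModelOption:
    name: str                 # hypothetical model identifier
    quality: float            # score on your own eval set, 0..1
    p95_latency_s: float      # measured 95th-percentile latency, seconds
    usd_per_1k_tokens: float  # blended price per 1K tokens

def choose_model(options: List[ModelOption], min_quality: float,
                 max_latency_s: float) -> ModelOption:
    """Return the cheapest option that meets both the quality and latency limits."""
    eligible = [
        m for m in options
        if m.quality >= min_quality and m.p95_latency_s <= max_latency_s
    ]
    if not eligible:
        raise ValueError("no model satisfies the constraints; relax one of them")
    return min(eligible, key=lambda m: m.usd_per_1k_tokens)

# Hypothetical catalog: every number here is a placeholder.
CATALOG = [
    ModelOption("large-model", quality=0.92, p95_latency_s=4.0, usd_per_1k_tokens=0.030),
    ModelOption("medium-model", quality=0.85, p95_latency_s=1.5, usd_per_1k_tokens=0.006),
    ModelOption("small-model", quality=0.70, p95_latency_s=0.6, usd_per_1k_tokens=0.001),
]

# The system prompt is where the agent's reasoning style and personality are set.
SYSTEM_PROMPT = (
    "You are a careful operations assistant. Work step by step, "
    "state your assumptions, and say 'I don't know' rather than guess."
)

if __name__ == "__main__":
    print(choose_model(CATALOG, min_quality=0.8, max_latency_s=2.0).name)
```

With these placeholder numbers, a 0.8 quality bar and a 2-second budget select `medium-model`. The large model is too slow, and the small one falls below the quality bar. Tightening or relaxing either constraint moves the choice along the power/speed/cost curve.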

2. The Toolkit (Giving the Brain Hands and Senses)

An agent is only useful if it can do things. The next step is to give it a “body” by providing a secure toolkit of functions and APIs. This is where solid full-stack development becomes critical. These tools are the agent’s hands and senses, allowing it to:

- Read from and write to your company’s databases.

- Fetch live data from external APIs (like stock prices or weather forecasts).

- Use vector stores for long-term memory and context retrieval.

- Interact with other internal software, like a CRM or ERP.

Without a well-built toolkit, the agent remains trapped in its own mind.
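One common way to wire up such a toolkit is a registry. Each tool pairs a function with a short description the LLM can read, and a dispatcher runs whatever tool call the model emits as JSON. The sketch below is a minimal version of that pattern under stated assumptions. The `{"tool": ..., "args": {...}}` call format, the tool names, the stubbed database, and the toy in-memory “vector store” are all hypothetical. In practice the embeddings would come from an embedding model and the store would be a real vector database.

```python
import json
import math
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical registry: tool name -> (description shown to the LLM, function).
TOOLS: Dict[str, Tuple[str, Callable[..., object]]] = {}

def tool(name: str, description: str):
    """Decorator that registers a plain Python function as an agent tool."""
    def register(fn):
        TOOLS[name] = (description, fn)
        return fn
    return register

# Toy in-memory "vector store": (embedding, text) pairs searched by cosine similarity.
MEMORY: List[Tuple[List[float], str]] = []

def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

@tool("remember", "Store a text snippet together with its embedding.")
def remember(embedding: List[float], text: str) -> str:
    MEMORY.append((embedding, text))
    return "stored"

@tool("recall", "Return the stored snippet most similar to a query embedding.")
def recall(embedding: List[float]) -> Optional[str]:
    if not MEMORY:
        return None
    return max(MEMORY, key=lambda item: cosine(item[0], embedding))[1]

@tool("get_order_status", "Look up an order's status in the company database.")
def get_order_status(order_id: str) -> str:
    fake_db = {"A-100": "shipped", "A-101": "processing"}  # stub for a real DB/CRM query
    return fake_db.get(order_id, "not found")

def describe_tools() -> str:
    """Tool catalog to include in the prompt so the LLM knows what it can call."""
    return "\n".join(f"- {name}: {desc}" for name, (desc, _) in TOOLS.items())

def dispatch(call_json: str):
    """Run a tool call the LLM emitted as JSON: {"tool": ..., "args": {...}}."""
    call = json.loads(call_json)
    if call["tool"] not in TOOLS:
        raise KeyError(f"unknown tool: {call['tool']}")
    _, fn = TOOLS[call["tool"]]
    return fn(**call.get("args", {}))

if __name__ == "__main__":
    print(describe_tools())
    print(dispatch('{"tool": "get_order_status", "args": {"order_id": "A-100"}}'))
```

Keeping the descriptions next to the functions means the catalog the model sees cannot drift from the code that actually runs. The dispatcher also gives you a single choke point where later layers, such as validation and logging, can hook in.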

3. The Logic (The Self-Correcting Nervous System)

This is the core architecture that allows the agent to behave autonomously. It’s the “nervous system” that connects the brain to the hands, enabling the agent to plan, act, and learn in a continuous loop. We design a system where the agent can:

- Plan: Break down a complex goal (“Organize next month’s sales report”) into smaller, manageable steps.

- Execute: Choose the right tool from its toolkit for each step and perform the action.

- Reflect: Observe the outcome of its action. Did it work? Was there an error? This self-correction loop is what truly separates an autonomous agent from a simple, brittle script. It allows the agent to learn from its mistakes and find a new path forward.
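The plan, execute, reflect loop can be sketched in a few dozen lines. To keep the sketch runnable without a model, it makes two simplifications. First, `plan` is a hypothetical hard-coded stand-in for an LLM call that would decompose the goal. Second, “finding a new path forward” is reduced to trying the next candidate tool from a fallback list. A real agent would more typically feed the error back to the LLM and let it re-plan. All tool names are hypothetical stubs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# A tool takes the shared context and returns new facts to merge into it.
Tool = Callable[[dict], dict]

@dataclass
class Step:
    description: str
    candidates: List[str]  # tool names to try, in order of preference

def plan(goal: str) -> List[Step]:
    """Hypothetical planner: a real agent would ask the LLM to decompose `goal`."""
    return [
        Step("fetch sales figures", ["sales_api", "sales_csv_backup"]),
        Step("summarize figures", ["summarize"]),
    ]

def run_agent(goal: str, tools: Dict[str, Tool]) -> Tuple[dict, List[str]]:
    context: dict = {}
    log: List[str] = []
    for step in plan(goal):                       # Plan
        for name in step.candidates:
            try:
                result = tools[name](context)     # Execute
            except Exception as exc:
                # Reflect: the action raised, so record why and try the next option.
                log.append(f"{step.description}: {name} failed ({exc})")
                continue
            if not result:
                # Reflect: "success" with no useful output still counts as a failure.
                log.append(f"{step.description}: {name} returned nothing")
                continue
            context.update(result)
            log.append(f"{step.description}: {name} ok")
            break
        else:
            # No candidate worked: stop rather than continue on missing data.
            raise RuntimeError(f"could not complete step: {step.description}")
    return context, log

# Hypothetical stub tools standing in for real integrations.
def sales_api(ctx: dict) -> dict:
    raise TimeoutError("sales API unavailable")

def sales_csv_backup(ctx: dict) -> dict:
    return {"figures": [120, 95, 143]}

def summarize(ctx: dict) -> dict:
    return {"summary": f"Total sales: {sum(ctx['figures'])}"}

DEMO_TOOLS = {"sales_api": sales_api, "sales_csv_backup": sales_csv_backup, "summarize": summarize}

if __name__ == "__main__":
    context, log = run_agent("Organize next month's sales report", DEMO_TOOLS)
    print("\n".join(log))
    print(context["summary"])
```

Running it logs the failed `sales_api` call, the successful fallback to `sales_csv_backup`, and the summary step, and then prints `Total sales: 358`. The `for ... else` clause is the important design choice: when every option for a step fails, the agent stops and surfaces the problem instead of pushing ahead on bad data.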

4. The Life Support (The Production-Ready Infrastructure)

Finally, to let the agent operate without constant human supervision, we build its “life support” system: the MLOps infrastructure that keeps it performant, reliable, and safe. This includes:

- Scalability: Packaging the agent in containers (like Docker) and running them on a cloud platform that scales them automatically, so it can handle one request or one million.

- Observability: Implementing comprehensive logging, tracing, and monitoring. This lets you watch its decisions in real time, track costs, and quickly debug any issues that arise.

- Security: Building strict “guardrails” to constrain the agent’s permissions, validate its inputs and outputs, and prevent it from taking unintended or harmful actions.
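The observability and security items can meet at a single wrapper around every tool call. This minimal sketch checks permissions and arguments before the call runs, and validates the output before it goes back to the model. It also emits one structured JSON log line per call, carrying a trace ID and its duration. The policy values (the allowed tools, the internal email domain, the size limits) and the `send_email` stub are hypothetical. The JSON log line is just one convenient shape, not a standard.

```python
import json
import logging
import time
import uuid
from typing import Any, Callable, Optional

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("agent")

# Hypothetical policy values; tune these to your own risk tolerance.
ALLOWED_TOOLS = {"get_order_status", "send_email"}
INTERNAL_DOMAIN = "@example.com"
MAX_RECIPIENTS = 5
MAX_OUTPUT_CHARS = 2000

class GuardrailViolation(Exception):
    """Raised when a tool call falls outside the agent's permissions."""

def check_input(tool_name: str, args: dict) -> None:
    if tool_name not in ALLOWED_TOOLS:
        raise GuardrailViolation(f"tool '{tool_name}' is not permitted")
    if tool_name == "send_email":
        to = args.get("to", [])
        if len(to) > MAX_RECIPIENTS:
            raise GuardrailViolation("too many recipients")
        if any(not addr.endswith(INTERNAL_DOMAIN) for addr in to):
            raise GuardrailViolation("external recipients need human approval")

def check_output(result: Any) -> None:
    # Keep tool output small and textual before it is fed back to the LLM.
    if not isinstance(result, str) or len(result) > MAX_OUTPUT_CHARS:
        raise GuardrailViolation("tool output failed validation")

def guarded_call(tool_name: str, fn: Callable[..., Any], args: dict,
                 trace_id: Optional[str] = None) -> str:
    """Validate, run, and log one tool call as a structured JSON record."""
    trace_id = trace_id or uuid.uuid4().hex
    start = time.perf_counter()
    status = "ok"
    try:
        check_input(tool_name, args)
        result = fn(**args)
        check_output(result)
        return result
    except GuardrailViolation as exc:
        status = f"blocked: {exc}"
        raise
    except Exception as exc:
        status = f"error: {exc}"
        raise
    finally:
        # One log line per call, whatever the outcome: easy to ship to a log backend.
        logger.info(json.dumps({
            "trace_id": trace_id,
            "tool": tool_name,
            "status": status,
            "duration_ms": round((time.perf_counter() - start) * 1000, 2),
        }))

def send_email(to: list, body: str) -> str:
    """Hypothetical stub; a real version would call your mail service."""
    return f"queued for {len(to)} recipient(s)"

if __name__ == "__main__":
    print(guarded_call("send_email", send_email,
                       {"to": ["ops@example.com"], "body": "Report ready"}))
    try:
        guarded_call("delete_records", lambda: "done", {})
    except GuardrailViolation as exc:
        print("blocked:", exc)
```

Because the log write sits in `finally`, blocked and failed calls are recorded just like successful ones. Those records are the ones you most want when debugging. Passing the same `trace_id` to every call in one agent run lets you reconstruct that run’s full decision path later.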

A great prompt is an idea. A production-ready agent is a complete, living system. Bridging that gap is the real work—and the real reward—of being an AI Agent Architect.

What’s the biggest hurdle you’ve faced moving AI from a Jupyter notebook to a live production environment?

Let’s discuss the real-world challenges in the comments below.

#AIAgents #SystemArchitecture #MLOps #LLM #SoftwareEngineering #AutonomousSystems #ProductionAI #CloudNative